World of Great Minds

Albert Einstein rewrote the laws of nature. He completely changed the way we understand the behavior of things as basic as light, gravity, and time.

Although scientists today are comfortable with Einstein’s ideas, in his time, they were completely revolutionary. Most people did not even begin to understand them.

- Provided powerful evidence that atoms and molecules actually exist, through his analysis of Brownian motion.

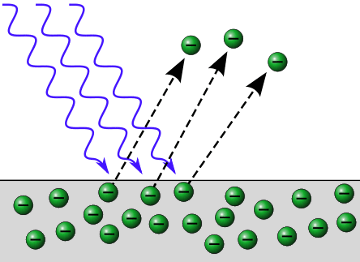

- Explained the photoelectric effect, proposing that light comes in bundles. Bundles of light (he called them quanta) with the correct amount of energy can eject electrons from metals.

- Proved that everyone, whatever speed we move at, measures the speed of light to be 300 million meters per second in a vacuum. This led to the strange new reality that time passes more slowly for people traveling at very high speeds compared with people moving more slowly.

- Discovered the hugely important and iconic equation E = mc², which shows that energy and matter can be converted into one another.

- Rewrote the law of gravitation, which had been unchallenged since Isaac Newton published it in 1687. In his General Theory of Relativity, Einstein:

  - Showed that matter causes space to curve, which produces gravity.

  - Showed that light follows the path mapped out by the gravitational curve of space.

  - Showed that time passes more slowly when gravity becomes very strong.

- Became the 20th century’s most famous scientist when the strange predictions he made in his General Theory of Relativity were verified by scientific observations.

- Spent his later years trying to find equations to unite quantum physics with general relativity. This was an incredibly hard task, and it has still not been achieved.

Einstein loved to be creative and innovative. He loathed the uncreative spirit in his school at Munich. His family’s business failed when he was aged 15, and they moved to Milan, Italy. Aged 16, he moved to Switzerland, where he finished high school.

In 1896 he enrolled for a science degree at the Swiss Federal Institute of Technology in Zurich. He didn’t like the teaching methods there, so he skipped classes to carry out experiments in the physics laboratory or play his violin. With the help of his classmates’ notes, he passed his exams; he graduated in 1900.

Einstein was not considered a good student by his teachers, and they refused to recommend him for further employment.

Mass Energy Equivalence

Einstein gave birth in 1905 to what has become the world’s most famous equation:

E = mc²

The equation says that mass (m) can be converted to energy (E). A little mass can make a lot of energy, because mass is multiplied by c², where c is the speed of light, a very large number.


A small amount of mass can make a large amount of energy. Conversion of mass in atomic nuclei to energy is the principle behind nuclear weapons and is the sun’s source of energy.
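To get a feel for how much energy a little mass represents, here is a minimal Python sketch of E = mc². The function name `rest_energy_joules` and the one-gram example are illustrative choices, not something from Einstein's paper.

```python
# Speed of light in a vacuum, in meters per second (exact SI value).
SPEED_OF_LIGHT = 299_792_458


def rest_energy_joules(mass_kg: float) -> float:
    """Return the energy equivalent E = m * c**2 of a mass in kilograms."""
    return mass_kg * SPEED_OF_LIGHT ** 2


if __name__ == "__main__":
    one_gram = 0.001  # kilograms
    # One gram of mass corresponds to roughly 9 x 10^13 joules.
    print(f"{rest_energy_joules(one_gram):.3e} J")
```

Because c is squared, even a paperclip's worth of mass works out to an enormous number of joules.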

The Photoelectric Effect

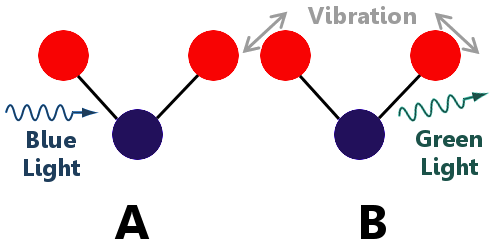

If you shine light on metal, the metal may release some of its electrons. Einstein said that light is made up of individual ‘particles’ of energy, which he called quanta. When these quanta hit the metal, they give their energy to electrons, which allows these electrons to escape from the metal.

Einstein showed that light can behave as a particle as well as a wave. The energy each ‘particle’ of light carries is proportional to the frequency of the light waves.
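The statement that a quantum's energy is proportional to its frequency can be sketched in a few lines of Python. The constant of proportionality is Planck's constant. The function names and the idea of a single threshold energy for escape (physicists call it the work function) are assumptions added for illustration, and the threshold used in the example is a made-up round number, not a measured value for any metal.

```python
# Planck's constant in joule-seconds (exact SI value).
PLANCK_CONSTANT = 6.62607015e-34


def photon_energy(frequency_hz: float) -> float:
    """Energy of one quantum of light: E = h * f."""
    return PLANCK_CONSTANT * frequency_hz


def can_eject_electron(frequency_hz: float, threshold_joules: float) -> bool:
    """True if one quantum carries at least the energy needed to free an electron."""
    return photon_energy(frequency_hz) >= threshold_joules


if __name__ == "__main__":
    threshold = 5e-19  # hypothetical escape energy in joules, for illustration only
    for frequency in (1e14, 1e15):  # a lower and a higher light frequency, in hertz
        print(frequency, can_eject_electron(frequency, threshold))
```

In this sketch, making the light brighter would not help a low-frequency beam: each quantum on its own still carries too little energy.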

Einstein’s Special Theory of Relativity

In Einstein’s third paper of 1905 he returned to the big problem he had heard about at university – how to reconcile Newton’s laws of motion with Maxwell’s equations of light. His approach was the ‘thought experiment.’ He imagined how the world would look if he could travel at the speed of light.

He realized that the laws of physics are the same everywhere, and regardless of what you did – whether you moved quickly toward a ray of light as it approached you, or quickly away from the ray of light – you would always see the light ray moving at the same speed – the speed of light!

This is not obvious, because it’s not how things work in everyday life, where, for example, if you move towards a child approaching you on a bike he will reach you sooner than if you move away from him. With light, it doesn’t matter whether you move towards or away from the light, it will take the same amount of time to reach you. This isn’t an easy thing to understand, so don’t worry about it if you don’t! (Unless you’re at university studying physics.) Every experiment ever done to test special relativity has confirmed what Einstein said.

If the speed of light is the same for all observers regardless of their speed, then it follows that some other strange things must be true. In fact, it turns out that time, length, and mass actually depend on the speed we are moving at. The nearer to the speed of light we move, the bigger the differences we see in these quantities compared with someone moving more slowly. For example, time passes more and more slowly as we move faster and faster.
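How much does time slow down? The standard result from special relativity is the Lorentz factor, 1 / √(1 − v²/c²). The article does not give this formula, so treat the Python sketch below as an added illustration; the function names are hypothetical.

```python
import math

# Speed of light in a vacuum, in meters per second.
SPEED_OF_LIGHT = 299_792_458.0


def lorentz_factor(speed: float) -> float:
    """Return 1 / sqrt(1 - v^2 / c^2) for a speed below the speed of light."""
    if not 0 <= speed < SPEED_OF_LIGHT:
        raise ValueError("speed must be at least 0 and less than the speed of light")
    return 1.0 / math.sqrt(1.0 - (speed / SPEED_OF_LIGHT) ** 2)


def dilated_time(traveler_time: float, speed: float) -> float:
    """Time measured by a stay-at-home observer while the traveler's clock
    advances by traveler_time."""
    return traveler_time * lorentz_factor(speed)


if __name__ == "__main__":
    # At 80% of light speed, 1 traveler-year is about 1.67 years at home.
    print(f"{dilated_time(1.0, 0.8 * SPEED_OF_LIGHT):.2f}")
```

At everyday speeds the factor is so close to 1 that nobody notices, which is why the effect seems so strange.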

Working on the general theory of relativity, in 1911 he made his first predictions of how our sun’s powerful gravity would bend the path of light coming from other stars that passed close to the sun.

The General Theory of Relativity – Einstein Becomes Famous Worldwide

Mass curves space, resulting in gravity.

A very, very rough approximation: the earth’s mass curves space. The moon’s speed keeps it rolling around the curve rather than falling to Earth. If you are on Earth and wish to leave, you need to climb out of the gravity well.

Einstein published his general theory of relativity paper in 1915, showing, for example, how gravity distorts space and time. Light is deflected by powerful gravity, not because of its mass (light has no mass) but because gravity has curved the space that light travels through.

In 1919 a British expedition traveled to the West African island of Principe to observe an eclipse of the sun. During the eclipse they tested whether light from far away stars passing close to the sun was deflected. They found that it was! Just as Einstein had said, space truly is curved.

Isaac Newton is perhaps the greatest physicist who has ever lived. He and Albert Einstein are almost equally matched contenders for this title.

Each of these great scientists produced dramatic and startling transformations in the physical laws we believe our universe obeys, changing the way we understand and relate to the world around us.

Isaac Newton, who was largely self-taught in mathematics and physics:

- Generalized the binomial theorem

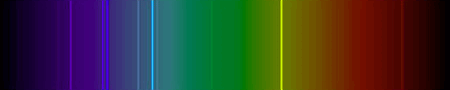

- Showed that sunlight is made up of all of the colors of the rainbow. He used one glass prism to split a beam of sunlight into its separate colors, then another prism to recombine the rainbow colors to make a beam of white light again.

- Built the world’s first working reflecting telescope.

- Discovered/invented calculus, the mathematics of change, without which we could not understand the behavior of objects as tiny as electrons or as large as galaxies.

- Wrote the Principia, one of the most important scientific books ever written; in it he used mathematics to explain gravity and motion. (Principia is pronounced with a hard c.)

- Discovered the law of universal gravitation, proving that the force holding the moon in orbit around the earth is the same force that causes an apple to fall from a tree.

- Formulated his three laws of motion – Newton’s Laws – which lie at the heart of the science of movement.

- Showed that Kepler’s laws of planetary motion are special cases of Newton’s universal gravitation.

- Proved that all objects moving through space under the influence of gravity must follow a path shaped in the form of one of the conic sections, such as a circle, an ellipse, or a parabola, hence explaining the paths all planets and comets follow.

- Showed that the tides are caused by gravitational interactions between the earth, the moon, and the sun.

- Predicted, correctly, that the earth is not perfectly spherical but is squashed into an oblate spheroid, larger around the equator than around the poles.

- Used mathematics to model the movement of fluids – from which the concept of a Newtonian fluid comes.

- Devised Newton’s Method for finding the roots of mathematical functions.
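Newton’s Method, the last item in the list above, starts from a guess and repeatedly slides down the tangent line to get a better guess. Here is a minimal Python sketch of the idea. The function name `newton_root`, the tolerance, and the iteration limit are illustrative assumptions, not Newton’s own formulation.

```python
def newton_root(f, df, x0, tol=1e-12, max_iter=50):
    """Find a root of f, given its derivative df and a starting guess x0."""
    x = x0
    for _ in range(max_iter):
        slope = df(x)
        if slope == 0:
            raise ZeroDivisionError("derivative is zero; try another starting guess")
        step = f(x) / slope  # how far the tangent line says we are from the root
        x -= step
        if abs(step) < tol:
            return x
    raise RuntimeError("did not converge within max_iter steps")


if __name__ == "__main__":
    # The positive root of x^2 - 2 is the square root of 2.
    print(newton_root(lambda x: x * x - 2, lambda x: 2 * x, x0=1.0))
```

The method usually converges very quickly when the starting guess is reasonable, but it can fail if the tangent is flat, which is why the sketch checks for a zero slope.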

Newton was the first person to fully develop calculus. Calculus is the mathematics of change. Modern physics and physical chemistry would be impossible without it. Other academic disciplines such as biology and economics also rely heavily on calculus for analysis.

In his development of calculus Newton was influenced by Pierre de Fermat, who had shown specific examples in which calculus-like methods could be used. Newton was able to build on Fermat’s work and generalize calculus.

Newton’s famous apple, which he saw falling from a tree in the garden of his family home in Woolsthorpe-by-Colsterworth, is not a myth.

He told people that seeing the apple’s fall made him wonder why it fell in a straight line towards the center of our planet rather than moving upwards or sideways.

Newton discovered the equation that allows us to calculate the force of gravity between two objects.

Most people don’t like equations much: E = mc² is as much as they can stand, but, for the record, here’s Newton’s equation:

F = G m₁ m₂ / r²

Newton’s equation says that you can calculate the gravitational force attracting one object to another by multiplying the masses of the two objects by the gravitational constant and dividing by the square of the distance between the objects’ centers.

Dividing by distance squared means Newton’s Law is an inverse-square law.
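Newton’s rule (multiply the two masses by the gravitational constant, then divide by the square of the distance) translates almost word for word into Python. This is a minimal sketch: the function name is hypothetical, and the Earth and Moon figures in the example are rounded textbook values used only for illustration.

```python
# Gravitational constant in N m^2 / kg^2 (rounded CODATA value).
GRAVITATIONAL_CONSTANT = 6.67430e-11


def gravitational_force(mass1_kg: float, mass2_kg: float, distance_m: float) -> float:
    """Attractive force in newtons between two masses whose centers are distance_m apart."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return GRAVITATIONAL_CONSTANT * mass1_kg * mass2_kg / distance_m ** 2


if __name__ == "__main__":
    earth_kg = 5.972e24  # approximate mass of the earth
    moon_kg = 7.348e22   # approximate mass of the moon
    separation_m = 3.844e8  # approximate average earth-moon distance
    print(f"{gravitational_force(earth_kg, moon_kg, separation_m):.2e} N")
```

With these rounded inputs the earth-moon pull comes out at roughly 2 × 10²⁰ newtons. Doubling the distance cuts the force to a quarter, which is the inverse-square behavior in action.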

Newton proved mathematically that any object moving in space affected by an inverse-square law will follow a path in the shape of one of the conic sections, the shapes which fascinated Archimedes and other Ancient Greek mathematicians.

For example, planets follow elliptical paths, while comets follow elliptical, parabolic, or hyperbolic paths.

And that’s it! Newton showed everyone how, if they wished to, they could calculate the force of gravity between things such as people, planets, stars, and apples.

Newton’s three laws of motion still lie at the heart of mechanics.

First law: Objects remain stationary or move at a constant velocity unless acted upon by an external force. This law was actually first stated by Galileo, whose influence Newton mentions several times in the Principia.

Second law: The force F on an object is equal to its mass m multiplied by its acceleration: F = ma.

Third law: When one object exerts a force on a second object, the second object exerts a force equal in size and opposite in direction on the first object.

Newton was not just clever with his mind. He was also skilled in experimental methods and working with equipment.

He built the world’s first reflecting telescope. This telescope focuses light using a curved mirror. Reflecting telescopes have several advantages over earlier telescopes including:

- They are cheaper to make.

- They are easier to make in large sizes, gathering more light, allowing higher magnification.

- They do not suffer from a focusing issue associated with lenses called chromatic aberration.

Newton also used glass prisms to establish that white light is not a simple phenomenon. He proved that it is made up of all of the colors of the rainbow, which could recombine to form white light again.

J. J. Thomson took science to new heights with his 1897 discovery of the electron – the first subatomic particle.

He also found the first evidence that stable elements can exist as isotopes and invented one of the most powerful tools in analytical chemistry – the mass spectrometer.

Discovery of the Electron – The first subatomic particle

In 1834, Michael Faraday coined the word ion to describe charged particles which were attracted to positively or negatively charged electrodes. So, in Thomson’s time, it was already known that atoms are associated in some way with electric charges, and that atoms could exist in ionic forms, carrying positive or negative charges. For example, table salt is made of ionized sodium and chlorine atoms.

Na⁺: A sodium ion with a single positive charge

Cl⁻: A chloride ion with a single negative charge

In 1891, George Johnstone Stoney coined the word electron to represent the fundamental unit of electric charge. He did not, however, propose that the electron existed as a particle in its own right. He believed that it represented the smallest unit of charge an ionized atom could have.

Atoms were still regarded as indivisible.

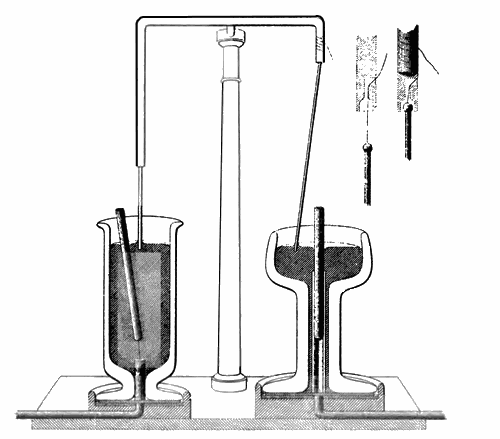

In 1897, aged 40, Thomson carried out a now famous experiment with a cathode ray tube.

A cathode ray tube, similar to that used by J. J. Thomson. The air in the hollow glass tube is pumped out to create a vacuum. Electrons are produced at the cathode by a high voltage and travel through the vacuum, creating a green glow when they strike the glass at the end. Here a metal cross casts a shadow, establishing that the electrons are traveling in straight lines and cannot travel through metal.

When Thomson allowed his cathode rays to travel through air rather than the usual vacuum he was surprised at how far they could travel before they were stopped. This suggested to him that the particles within the cathode rays were many times smaller than scientists had estimated atoms to be.

So, cathode ray particles were smaller than atoms! What about their mass? Did they have a mass typical of, say, a hydrogen atom, the smallest particle then known?

To estimate the mass of a cathode ray particle and discover whether its charge was positive or negative, Thomson deflected cathode rays with electric and magnetic fields to see the direction they were deflected in and how far they were pulled off course. He knew the size of the deflection would tell him about the particle’s mass and the direction of the deflection would tell him the charge the particles carried. He also estimated mass by measuring the amount of heat the particles generated when they hit a target.

Thomson used a cloud chamber to establish that a cathode ray particle carries the same amount of charge (i.e. one unit) as a hydrogen ion.

From these experiments he drew three revolutionary conclusions:

- Cathode ray particles are negatively charged.

- Cathode ray particles are at least a thousand times lighter than a hydrogen atom.

- Whatever source was used to generate them, all cathode ray particles are of identical mass and identical charge.

“As the cathode rays carry a charge of negative electricity, are deflected by an electrostatic force as if they were negatively electrified, and are acted on by a magnetic force in just the way in which this force would act on a negatively electrified body moving along the path of these rays, I can see no escape from the conclusion that they are charges of negative electricity carried by particles of matter.”

J. J. THOMSON

Ernest Rutherford is the father of nuclear chemistry and nuclear physics. He discovered and named the atomic nucleus, the proton, the alpha particle, and the beta particle. He discovered the concept of nuclear half-lives and achieved the first deliberate transformation of one element into another, fulfilling one of the ancient passions of the alchemists.

Discovery of alpha and beta radiation

Starting in 1898 Rutherford studied the radiation emitted by uranium. He discovered two different types of radiation, which he named alpha and beta.

By allowing radiation from uranium to pass through an increasing number of layers of metal foil, he discovered that:

- beta particles have greater penetrating power than alpha particles

By the direction of their movement in a magnetic field, he deduced that:

- alpha particles are positively charged

By measuring the ratio of mass to charge, he formed the hypothesis that:

- alpha particles are helium ions carrying a 2+ charge

With his co-worker, Frederick Soddy, Rutherford came to the conclusion that:

- alpha particles are atomic in nature

- alpha particles are produced by the disintegration of larger atoms and so atoms are not, as everyone had believed, indestructible

- when large atoms emit alpha particles they become slightly smaller atoms, which means radioactive elements must change into other elements when they decay.

Rutherford coined the terms alpha, beta, and gamma for the three most common types of nuclear radiation. We still use these terms today. (Gamma radiation was discovered by Paul Villard in Paris, France in 1900.)

Rutherford began his investigation of alpha and beta radiation in the same year that Pierre and Marie Curie discovered the new radioactive elements polonium and radium.

“I have to keep going, as there are always people on my track. I have to publish my present work as rapidly as possible in order to keep in the race. The best sprinters in this road of investigation are Becquerel and the Curies.”


The age of planet Earth and radiometric dating

Rutherford realized that Earth’s helium supply is largely produced by the decay of radioactive elements. He devised a method of dating rocks relating their age to the amount of helium present in them.

Based on the fact that our planet is still volcanically active, Lord Kelvin had argued that Earth could be no more than 400 million years old. He said Earth could be older than this only if some new source of energy could be found that was heating it internally.

Rutherford identified the new source – the energy released by radioactive decay of elements.

He also began the science of radiometric dating – using the products of radioactive decay to find out how old things are.
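The basic arithmetic behind radiometric dating can be sketched in Python. This is a deliberately simplified model, not Rutherford’s actual helium method: it assumes a single parent element with a known half-life, a sample that started with no decay product, and no product escaping from the rock. The function names are hypothetical.

```python
import math


def remaining_fraction(elapsed: float, half_life: float) -> float:
    """Fraction of the original parent atoms left after `elapsed` time."""
    return 0.5 ** (elapsed / half_life)


def age_from_ratio(product_atoms: float, parent_atoms: float, half_life: float) -> float:
    """Estimate a sample's age from the ratio of decay product to remaining parent.

    Assumes every product atom came from one decayed parent atom and that
    none has been lost, so age = half_life / ln(2) * ln(1 + product / parent).
    """
    return half_life / math.log(2) * math.log(1 + product_atoms / parent_atoms)


if __name__ == "__main__":
    # Equal amounts of product and parent mean exactly one half-life has passed.
    print(age_from_ratio(product_atoms=1.0, parent_atoms=1.0, half_life=100.0))
```

The age comes out in whatever unit the half-life is given in. Real rocks lose some helium over time, so an age found this way tends to be an underestimate.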

“Lord Kelvin had limited the age of the Earth, provided no new source (of energy) was discovered. That prophetic utterance refers to what we are now considering tonight, radium!”


His new model required atoms to have a small, very dense core. With this step, guided by his experimental data, Rutherford had discovered the atomic nucleus.

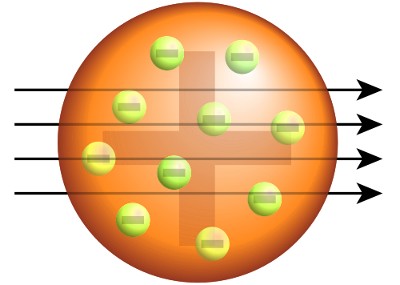

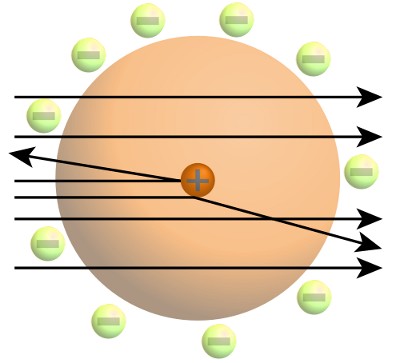

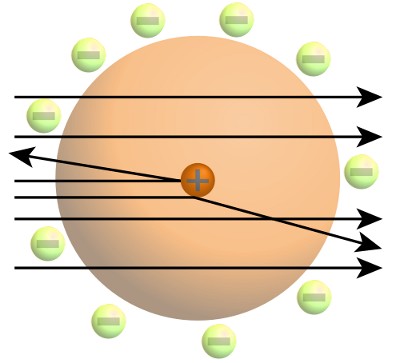

J. J. Thomson had modeled the atom as a sphere in which positive charge and mass were evenly spread. Electrons orbited within the positive sphere. This was called the plum pudding model.

The results of the gold foil experiment allowed Rutherford to build a more accurate model of the atom, in which nearly all of the mass was concentrated in a tiny, dense nucleus. Most of the atom’s volume was empty space. The nucleus was like a fly floating in a football stadium – remembering of course that the fly was much heavier than the stadium! Electrons orbited at some distance from the nucleus. This was called the Rutherford model. It resembles planets orbiting a star.

Although Rutherford had received a Nobel Prize for his earlier work, his discovery of the atomic nucleus was probably his greatest achievement.

John Dalton’s Atomic Theory laid the foundations of modern chemistry.

Atomic Theory

The Behavior of Gases

In 1801, Dalton gave a series of lectures in Manchester whose contents were published in 1802. In these lectures he presented research he had been carrying out into gases and liquids. This research was groundbreaking, offering great new insights into the nature of gases.

Firstly, Dalton stated correctly that he had no doubt that all gases could be liquefied provided their temperature was sufficiently low and pressure sufficiently high.

He then stated that when its volume is held constant in a container, the pressure of a gas varies in direct proportion to its temperature.

This was the first public statement of what eventually became known as Gay-Lussac’s Law, named after Joseph Gay-Lussac who published it in 1809.
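The pressure-temperature rule is easy to try out in a short Python sketch. One assumption is added here that the text does not spell out: the proportionality only works when temperature is measured on an absolute scale such as kelvin. The function name is hypothetical.

```python
def pressure_at_temperature(pressure1: float, temp1_kelvin: float, temp2_kelvin: float) -> float:
    """New pressure of a fixed volume of gas after its temperature changes.

    Uses P1 / T1 = P2 / T2, with temperatures in kelvin.
    """
    if temp1_kelvin <= 0 or temp2_kelvin <= 0:
        raise ValueError("absolute temperatures must be positive")
    return pressure1 * (temp2_kelvin / temp1_kelvin)


if __name__ == "__main__":
    # Doubling the absolute temperature doubles the pressure.
    print(pressure_at_temperature(100.0, 300.0, 600.0))  # prints 200.0
```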

In 1803, Dalton published his Law of Partial Pressures, still used by every university chemistry student, which states that in a mixture of non-reacting gases, the total gas pressure is equal to the sum of the partial pressures of the individual gases.
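As a small illustration of the Law of Partial Pressures, the Python sketch below simply adds up the pressure each gas would exert on its own. The function name is hypothetical and the air-like mixture uses rounded, illustrative numbers.

```python
def total_pressure(partial_pressures: dict[str, float]) -> float:
    """Total pressure of a mixture of non-reacting gases: the sum of the parts."""
    return sum(partial_pressures.values())


if __name__ == "__main__":
    # Rounded, illustrative partial pressures in kilopascals.
    air_like_mixture = {"nitrogen": 78.0, "oxygen": 21.0, "argon": 1.0}
    print(total_pressure(air_like_mixture))  # prints 100.0
```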

Dalton’s work distinguished him as a scientist of the first rank, and he was invited to give lectures at the Royal Institution in London.

Dalton and Atoms

His study of gases led Dalton to wonder about what these invisible substances were actually made of.

The idea of atoms had first been proposed more than 2000 years earlier by Democritus in Ancient Greece. Democritus believed that everything was made of tiny particles called atoms and that these atoms could not be split into smaller particles. Was Democritus right? Nobody knew!

Dalton was now going to solve this 2000-year-old mystery.

He carried out countless chemical reactions and in 1808 published what we now call Dalton’s Law of Multiple Proportions in his book A New System of Chemical Philosophy.

If two elements form more than one compound between them, then the ratios of the masses of the second element which combine with a fixed mass of the first element will be ratios of small whole numbers.

For example, Dalton found that 12 grams of carbon could react with 16 grams of oxygen to form the compound we now call carbon monoxide.

He also found that 12 grams of carbon could react with 32 grams of oxygen to form carbon dioxide.

This ratio of 32:16, which simplifies to 2:1, intrigued Dalton.
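The whole-number pattern can be checked with Python’s built-in `fractions` module, using the oxygen masses quoted above for a fixed 12 grams of carbon. The function name `combining_ratio` is a hypothetical label for this sketch.

```python
from fractions import Fraction


def combining_ratio(mass_in_first_compound: int, mass_in_second_compound: int) -> Fraction:
    """Ratio, in lowest terms, of the masses of one element that combine
    with the same fixed mass of another element in two compounds."""
    return Fraction(mass_in_first_compound, mass_in_second_compound)


if __name__ == "__main__":
    # Grams of oxygen per 12 g of carbon: 32 in carbon dioxide, 16 in carbon monoxide.
    ratio = combining_ratio(32, 16)
    print(f"{ratio.numerator}:{ratio.denominator}")  # prints 2:1
```

A ratio of small whole numbers is exactly what you would expect if oxygen comes in indivisible units, with one compound holding twice as many per carbon atom as the other.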

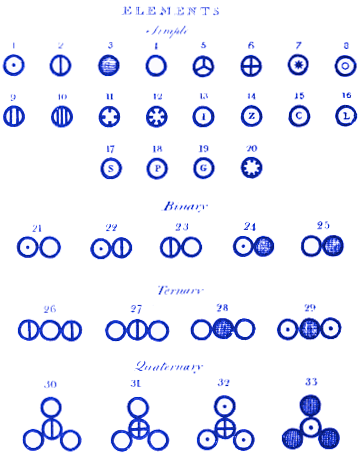

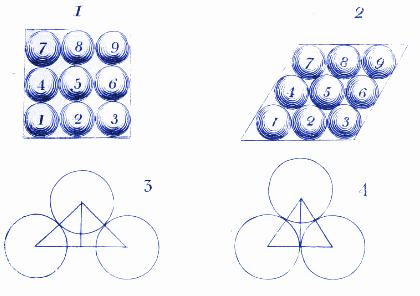

Analyzing all the data he collected, Dalton stated his belief that matter exists as atoms. He went further than Democritus, by stating that atoms of different elements have different masses. He also published diagrams showing, for example:

1. How atoms combine to form molecules

At the top of his diagram, Dalton assigns atom 1 to be hydrogen, 2 nitrogen, 3 carbon, 4 oxygen, 5 phosphorus, etc.

He then shows how molecules might look when the atoms combine to form compounds. For example, molecule 21 is water (OH), 22 is ammonia (NH) and 23 is nitrogen oxide (NO). Modern readers will see that Dalton got molecules 21 and 22 wrong. This is less important than the fact that Dalton’s system of atoms and molecules is almost identical to how we might represent them today. For example, Dalton’s molecule 28 is carbon dioxide. Today, we would still draw carbon dioxide in this way.

Amedeo Avogadro soon published work that built on Dalton’s and corrected some of Dalton’s errors – for example Avogadro said that water should be written H₂O. Unfortunately Avogadro’s work was ignored for many years, partly because it disagreed with Dalton’s.

2. How molecules of water might look in ice

Here Dalton shows how water molecules might arrange themselves when they are frozen in ice. We use similar diagrams today to show how atoms and molecules arrange themselves in crystals.

Dalton’s Atomic Theory states that:

1. The elements are made of atoms, which are tiny particles, too small to see.

2. All atoms of a particular element are identical.

3. Atoms of different elements have different properties: their masses are different, and their chemical reactions are different.

4. Atoms cannot be created, destroyed, or split.

5. In a chemical reaction, atoms link to one another, or separate from one another.

6. Atoms combine in simple whole-number ratios to form compounds.

Although we have learned that atoms of the same element can have different masses (isotopes), and can be split in nuclear reactions, most of Dalton’s Atomic Theory holds good today, over 200 years after he described it. It is the foundation modern chemistry was built upon.

James Chadwick discovered the neutron in 1932 and was awarded the Nobel Prize for Physics in 1935.

The Nucleus, and the Neutron

In 1923, aged 32, Chadwick became Rutherford’s Assistant Director of Research in the Cavendish Laboratory where he continued to study the atomic nucleus.

In those days, most researchers believed there were electrons within the nucleus as well as outside it. For example, the nucleus of a carbon atom was thought to contain 12 protons and 6 electrons, giving it an electric charge of +6. Orbiting the nucleus were supposed to be another 6 electrons causing the atom’s overall electric charge to be 0.

Rutherford, Chadwick, and some others believed in the possibility that particles with no charge could be in the nucleus.

In his spare time, through the 1920s, Chadwick made a variety of attempts in the laboratory to find these neutral particles, but without success. He was increasingly convinced of the existence of a neutral particle, the neutron, but he couldn’t get the evidence he needed to prove it.

Then, at the beginning of 1932, Chadwick learned of work that Frederic and Irene Joliot-Curie had just done in Paris. The Joliot-Curies believed they had managed to eject protons from a sample of wax using gamma rays. This did not make sense to Chadwick, who thought gamma rays were not powerful enough to do this. However, the evidence that protons had been hit with sufficient energy to eject them was convincing.

The gamma ray source had been the radioactive element polonium. Chadwick drew the conclusion that the protons had actually been hit by the particle he was looking for: the neutron.

Feverishly, he began working in the Cavendish laboratory. Using polonium as a source of (what he believed were) neutrons, he bombarded wax. Protons were released by the wax and Chadwick made measurements of the protons’ behavior.

The protons behaved in exactly the manner they ought to if they had been hit by electrically neutral particles with a mass similar to the proton. Chadwick had discovered the neutron.

Within two weeks he had written to the prestigious science journal Nature to announce the Possible Existence of a Neutron.

Chadwick did not think he had discovered a new elementary particle. He believed the neutron was a complex particle consisting of a proton and an electron. The German physicist Werner Heisenberg showed that the neutron could not be an electron-proton pair, and was actually a new elementary particle.

In 1935, James Chadwick received the Nobel Prize in Physics for his discovery of the neutron.

An image from an expansion chamber in Chadwick’s laboratory. A neutron collides with an atom of nitrogen-14. The nitrogen atom splits into boron-11 and helium-4.

And what of the Joliot-Curies? If only they had interpreted their results correctly, they might have discovered the neutron themselves. In fact, Frederic and Irene Joliot-Curie also received a Nobel Prize in 1935, for chemistry: they were the first people to create new, synthetic, radioactive elements.

“I have already mentioned Rutherford’s suggestion that there might exist a neutral particle formed by the close combination of a proton and an electron, and it was at first natural to suppose that the neutron might be such a complex particle. On the other hand, a structure of this kind cannot be fitted into the scheme of the quantum mechanics,… the statistics and spins of the lighter elements can only be given a consistent description if we assume that the neutron is an elementary particle.”


JAMES CHADWICK, 1935

New Elements and Nuclear Reactions

The discovery of the neutron dramatically changed the course of science, because neutrons could be collided with atomic nuclei. Some of the neutrons would embed in a nucleus, increasing its mass. Natural beta decay (the emission of an electron from an atom’s nucleus) would then convert the neutron into a proton. Since an element is defined by the number of protons it has (hydrogen has 1, helium 2, lithium 3, beryllium 4, boron 5, carbon 6, nitrogen 7, oxygen 8, etc.), this enabled scientists to make new, heavier elements in the laboratory.

It also meant neutrons could be utilized to split heavy atoms in a process known as nuclear fission, producing a large amount of energy which could be used in atomic bombs or nuclear power plants.

World War 2 and the Atomic Bomb

In 1939, the first year of World War 2, Chadwick was asked by the British Government about building an atomic bomb. He said it was possible, but would not be easy.

Preliminary research began in a number of universities. Working conditions in Chadwick’s laboratory were arduous. The neighborhood in Liverpool was frequently attacked in air-raids by the German Air Force. Despite the bombing, by spring 1941, Chadwick’s research group had discovered that the critical mass of uranium-235 for a nuclear detonation was about 8 kilograms.

Wartime Liverpool after a German air-raid. Chadwick’s laboratory often shook from bomb blasts in the neighborhood.

Chadwick wrote a summary report in summer 1941 of all the atomic bomb work carried out in British universities. In the USA, President Roosevelt read the report in the fall of 1941, and the USA started to pour millions of dollars into its own atomic bomb research. When he met them, Chadwick told American representatives he was 90 percent sure the bomb would work.

In late 1943, Chadwick traveled to the USA to see the Manhattan Project’s facilities. He was one of only three men in the world to enjoy access to all of America’s research, data, and production plants for the bomb: the other two were American Major General Leslie Groves, the Manhattan Project’s Director, and Groves’ second in command, Major General Thomas Francis Farrell.

Early in 1944, Chadwick, his wife, and children, moved to Los Alamos, the main research center for the Manhattan Project.

Chadwick was present when the US and UK governments agreed that the bomb could be used against Japan. He then attended the Trinity nuclear test on July 16, 1945, when the world’s first atomic bomb was detonated.

In 1945, the British Government knighted him for his wartime contribution, and he became Sir James Chadwick. The U.S. Government awarded him the Medal of Merit in 1946.

Charles-Augustin de Coulomb formulated his law as a consequence of his efforts to study the law of electrical repulsions put forward by English scientist Joseph Priestley. In the process, he devised sensitive apparatus to measure the electrical forces described by Priestley’s law. Coulomb published his theories in 1785–89.

He also developed the inverse-square law of attraction and repulsion between unlike and like magnetic poles. This laid the foundation for the mathematical theory of magnetic forces formulated by French mathematician Siméon-Denis Poisson. Coulomb also worked extensively on friction in machinery, the elasticity of metal and silk fibers, and windmills. The coulomb, the SI unit of electric charge, is named after him.
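Coulomb’s law for electric charges has the same inverse-square shape. In its standard modern form, which the article does not write out, the force equals a constant times the two charges divided by the square of the distance between them. The Python sketch below uses that form; the function name is hypothetical and the constant is a rounded value.

```python
# Coulomb constant in N m^2 / C^2 (rounded value).
COULOMB_CONSTANT = 8.9875517923e9


def coulomb_force(charge1_c: float, charge2_c: float, distance_m: float) -> float:
    """Force in newtons between two point charges given in coulombs.

    A positive result means the charges repel (like charges);
    a negative result means they attract (unlike charges).
    """
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return COULOMB_CONSTANT * charge1_c * charge2_c / distance_m ** 2


if __name__ == "__main__":
    # Two opposite one-microcoulomb charges, 10 centimeters apart.
    print(coulomb_force(1e-6, -1e-6, 0.1))
```

The sign convention in the return value is a design choice for this sketch: it keeps attraction and repulsion in one number instead of returning a direction separately.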

Georg Simon Ohm was a German physicist, best known for his “Ohm’s Law”, which states that the current flow through a conductor is directly proportional to the potential difference (voltage) and inversely proportional to the resistance. The unit of electrical resistance, the ohm (symbol: Ω), is named after him.

While teaching in Cologne, Ohm started passionately working on the conductivity of metals and the behavior of electrical circuits.

After extensive research, he wrote “Die Galvanische Kette, Mathematisch Bearbeitet” (The Galvanic Circuit Investigated Mathematically) in 1827, which formulated the relationship between voltage (potential difference), current and resistance in an electrical circuit:

I = V / R

Current (I) is measured in amperes (A), potential difference (V) in volts (V), and resistance (R) in ohms (Ω). This equation, as defined using the unit of resistance above, was not formalized until the 1860s.
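
As a quick worked example of I = V / R, the short Python sketch below computes the current for a few illustrative values. The function name `current_amperes` and the numbers are hypothetical, chosen only to show the two proportionalities.

```python
def current_amperes(voltage_volts, resistance_ohms):
    """Ohm's law: current = voltage / resistance."""
    if resistance_ohms <= 0:
        raise ValueError("resistance must be positive")
    return voltage_volts / resistance_ohms


# A 12 V supply across a 4 ohm resistor.
print(current_amperes(12.0, 4.0))   # 3.0

# Doubling the voltage doubles the current (direct proportion).
print(current_amperes(24.0, 4.0))   # 6.0

# Doubling the resistance halves the current (inverse proportion).
print(current_amperes(12.0, 8.0))   # 1.5
```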

Ohm’s work initially drew criticism, most notably from Georg Hegel, the noted creator of German Idealism, who rejected the validity of Ohm’s experimental approach. The “glory” finally came in 1841, when the Royal Society of London honored him with the Copley Medal for his extraordinary efforts. Several German scholars, including an adviser to the State on the development of the telegraph, also recognized Ohm’s work a few months later.

The pertinence of Ohm’s Law was eventually recognized. The law remains the most widely used and appreciated of all the rules relating to the behavior of electrical circuits.

Awards:

He received the Royal Society Copley medal in 1841.

Georg Simon Ohm was made a foreign member of the Royal Society in 1842, and became a full member of the Bavarian Academy of Sciences and Humanities in 1845.

Michael Faraday, who came from a very poor family, became one of the greatest scientists in history. His achievement was remarkable in a time when science was usually the preserve of people born into wealthy families. The unit of electrical capacitance is named the farad in his honor, with the symbol F.

Michael Faraday was eager to learn more about the world – he did not restrict himself to binding the shop’s books. After working hard each day, he spent his free time reading the books he had bound.

Gradually, he found he was reading more and more about science. Two books in particular captivated him:

- The Encyclopedia Britannica – his source for electrical knowledge and much more

- Conversations on Chemistry – 600 pages of chemistry for ordinary people written by Jane Marcet

He became so fascinated that he started spending part of his meager pay on chemicals and apparatus to confirm the truth of what he was reading.

As he learned more about science, he heard that the well-known scientist John Tatum was going to give a series of public lectures on natural philosophy (physics). The fee to attend the lectures was one shilling – too much for Michael Faraday. His older brother, a blacksmith, impressed by his brother’s growing devotion to science, gave him the shilling he needed.

It is worth noting that the parallels in the lives of Michael Faraday and Joseph Henry are rather striking. Both were born in poverty; had fathers who often could not work because of ill-health; became apprentices; were inspired to become scientists by reading particular books; were devoutly religious; became laboratory assistants; made their greatest contributions in the same scientific era in the field of electrical science; and have an SI unit named in their honor.

Introduction to Humphry Davy and More Science

Faraday’s education took another step upward when William Dance, a customer of the bookshop, asked if he would like tickets to hear Sir Humphry Davy lecturing at the Royal Institution.

Sir Humphry Davy was one of the most famous scientists in the world. Faraday jumped at the chance and attended four lectures about one of the newest problems in chemistry – defining acidity. He watched Davy perform experiments at the lectures.

This was the world he wanted to live in, he told himself. He took notes and then made so many additions to the notes that he produced a 300 page handwritten book, which he bound and sent to Davy as a tribute.

An 1802 drawing by James Gillray of another exciting science lecture at the Royal Institution! Humphry Davy is the dark-haired man holding the gas bag.

At this time Faraday had begun more sophisticated experiments at the back of the bookshop, building an electric battery using copper coins and zinc discs separated by moist, salty paper. He used his battery to decompose chemicals such as magnesium sulfate. This was the type of chemistry Humphry Davy had pioneered.

In October 1812 Faraday’s apprenticeship ended, and he began work as a bookbinder with a new employer, whom he found unpleasant.

Others’ Misfortunes Help Faraday

And then there was a fortunate (for Faraday) accident. Sir Humphry Davy was hurt in an explosion when an experiment went wrong: this temporarily affected his ability to write. Faraday managed to get work for a few days taking notes for Davy, who had been impressed by the book Faraday had sent him. There were some advantages to being a bookbinder after all!

When his short time as Davy’s note-taker ended, Faraday sent a note to Davy, asking if he might be employed as his assistant. Soon after this, one of Davy’s laboratory assistants was fired for misconduct, and Davy sent a message to Faraday asking him if he would like the job of chemical assistant.

Michael Faraday’s Career at the Royal Institution

Faraday began work at the Royal Institution of Great Britain at the age of 21 on March 1, 1813.

His salary was good, and he was given a room in the Royal Institution’s attic to live in. He was very happy with the way things had turned out.

He was destined to be associated with the Royal Institution for 54 years, ending up as a Professor of Chemistry. His first job was as a chemical assistant, preparing apparatus for experiments and lectures. This involved working with nitrogen trichloride, the explosive which had already injured Davy. Faraday himself was knocked unconscious briefly by another nitrogen chloride explosion, and then Davy was injured again, finally putting an end to work with that particular substance.

After just seven months at the Royal Institution, Davy took Faraday as his secretary on a tour of Europe that lasted 18 months.

In 1816, aged 24, Faraday gave his first ever lecture, on the properties of matter, to the City Philosophical Society. And he published his first ever academic paper, discussing his analysis of calcium hydroxide, in the Quarterly Journal of Science.

In 1821, aged 29, he was promoted to be Superintendent of House and Laboratory of the Royal Institution. He also married Sarah Barnard. He and his bride lived in rooms in the Royal Institution for most of the next 46 years: no longer in attic rooms, they lived in a comfortable suite Humphry Davy himself had once lived in.

In 1824, aged 32, he was elected to the Royal Society. This was recognition that he had become a notable scientist in his own right.

In 1825, aged 33, he became Director of the Royal Institution’s Laboratory.

In 1833, aged 41, he became Fullerian Professor of Chemistry at the Royal Institution of Great Britain. He held this position for the rest of his life.

In 1848, aged 56, and again in 1858 he was offered the Presidency of the Royal Society, but he turned it down.

Michael Faraday’s Scientific Achievements and Discoveries

1821: Discovery of Electromagnetic Rotation

This is a glimpse of what would eventually develop into the electric motor, based on Hans Christian Oersted’s discovery that a wire carrying electric current has magnetic properties.

Faraday’s electromagnetic rotation apparatus. Electricity flows through the wires. The liquid in the cups is mercury, a good conductor of electricity. In the cup on the right, the metal wire continuously rotates around the central magnet as long as electric current is flowing through the circuit.

1823: Gas Liquefaction and Refrigeration

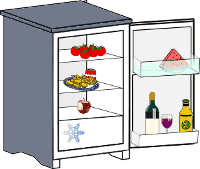

In 1802 John Dalton had stated his belief that all gases could be liquefied by the use of low temperatures and/or high pressures. Faraday provided hard evidence for Dalton’s belief when he used high pressures to produce the first ever liquid samples of chlorine and ammonia.

Showing that ammonia could be liquefied under pressure, then evaporated to cause cooling, led to commercial refrigeration.

The importance of Faraday’s discovery was that he had shown that mechanical pumps could transform a gas at room temperature into a liquid. The liquid could then be evaporated, cooling its surroundings, and the resulting gas could be collected and compressed by a pump into a liquid again. The whole cycle could then be repeated. This is the basis of how modern refrigerators and freezers work.

In 1862 Ferdinand Carré demonstrated the world’s first commercial ice-making machine at the Universal London Exhibition. The machine used ammonia as its coolant and produced ice at the rate of 200 kg per hour.

1825: Discovery of Benzene

Historically, benzene is one of the most important substances in chemistry, both in a practical sense – i.e. making new materials; and in a theoretical sense – i.e. understanding chemical bonding. Michael Faraday discovered benzene in the oily residue left behind from producing gas for lighting in London.

A model of a benzene molecule.

1831: Discovery of Electromagnetic Induction

This was an enormously important discovery for the future of both science and technology. Faraday discovered that a varying magnetic field causes electricity to flow in an electric circuit.

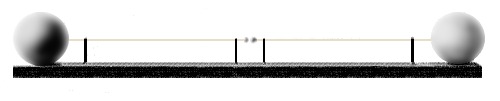

Moving the magnet causes a current to flow. You need a sensitive ammeter to observe the tiny current caused by a small magnet. The stronger the magnet, the bigger the current. Pushing a bar magnet into a coil of wire can generate a larger current.

For example, moving a horseshoe magnet over a wire produces an electric current, because the movement of the magnet causes a varying magnetic field.

Previously, people had only been able to produce electric current with a battery. Now Faraday had shown that movement could be turned into electricity – or in more scientific language, kinetic energy could be converted to electrical energy.

Most of the power in our homes today is produced using this principle. Rotation (kinetic energy) is converted into electricity using electromagnetic induction. The rotation can be produced by high pressure steam from coal, gas, or nuclear energy turning turbines; or by hydroelectric plants; or by wind-turbines, for example.
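
The link between a changing magnetic field and electricity can be put into numbers. The Python sketch below uses the standard textbook statement of Faraday's law of induction, EMF = −N × (change in magnetic flux) / (elapsed time), which the article does not spell out. The function name `induced_emf` and the coil values are hypothetical.

```python
def induced_emf(turns, flux_start_wb, flux_end_wb, seconds):
    """Average induced EMF in volts for a coil of `turns` loops.

    Implements EMF = -N * (change in flux) / (elapsed time).
    The minus sign expresses that the induced voltage opposes the change.
    """
    if seconds <= 0:
        raise ValueError("elapsed time must be positive")
    return -turns * (flux_end_wb - flux_start_wb) / seconds


# Pushing a magnet into a 100-turn coil: flux rises from 0 to 0.002 Wb in 0.1 s.
print(induced_emf(100, 0.0, 0.002, 0.1))    # about -2 V

# Moving the magnet ten times faster gives about ten times the voltage.
print(induced_emf(100, 0.0, 0.002, 0.01))   # about -20 V

# A magnet held still: the flux does not change, so no voltage is induced.
print(induced_emf(100, 0.002, 0.002, 0.1))
```

The sketch reports an average value over the time interval, which is enough to show why only a varying field produces a current.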

1834: Faraday’s Laws of Electrolysis

Faraday was one of the major players in the founding of the new science of electrochemistry, which studies events at the interfaces of electrodes with ionic substances. Electrochemistry is the science that has produced the lithium-ion batteries and metal hydride batteries capable of powering modern mobile technology. Faraday’s laws are vital to our understanding of batteries and electrode reactions.
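
In their usual modern form, Faraday's laws of electrolysis say that the mass of a substance deposited at an electrode is proportional to the electric charge passed, and depends on the substance's molar mass and ionic charge. The Python sketch below applies that textbook relationship, mass = (Q / F) × (M / z). The formula, the rounded Faraday constant, the function name, and the copper example are assumptions added for illustration and do not come from the article.

```python
# Faraday constant: charge carried by one mole of electrons, in C/mol (rounded).
FARADAY_CONSTANT = 96485.0


def mass_deposited_grams(current_amperes, seconds, molar_mass_g_per_mol, ion_charge):
    """Mass deposited at an electrode during electrolysis.

    charge passed        Q = current * time
    moles of electrons   Q / F
    moles of substance   (Q / F) / ion_charge
    mass                 moles of substance * molar mass
    """
    charge_coulombs = current_amperes * seconds
    moles_of_electrons = charge_coulombs / FARADAY_CONSTANT
    return moles_of_electrons / ion_charge * molar_mass_g_per_mol


# Copper plating from Cu2+ ions (molar mass 63.546 g/mol, ion charge 2)
# with a current of 2 A flowing for one hour.
print(mass_deposited_grams(2.0, 3600.0, 63.546, 2))   # roughly 2.37 g
```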

1836: Invention of the Faraday Cage

Faraday discovered that when any electric conductor becomes charged, all the extra charge sits on the outside of the conductor. This means that the extra charge does not appear on the inside of a room or cage made of metal.

The image at the top of this page shows a man wearing a Faraday Suit, which has a metallic lining, keeping him safe from the electricity outside his suit.

In addition to offering protection for people, sensitive electrical or electrochemical experiments can be placed inside a Faraday Cage to prevent interference from external electrical activity.

Faraday cages can also create dead zones for mobile communications.

1845: Discovery of the Faraday Effect – a magneto-optical effect

This was another vital experiment in the history of science, the first to link electromagnetism and light – a link finally described fully by James Clerk Maxwell’s equations in 1864, which established that light is an electromagnetic wave.

Faraday discovered that a magnetic field causes the plane of light polarization to rotate.

When the contrary magnetic poles were on the same side, there was an effect produced on the polarized ray, and thus magnetic force and light were proved to have relation to each other…

1845: Discovery of Diamagnetism as a Property of all Matter

Most people are familiar with ferromagnetism – the type shown by normal magnets.

The frog is slightly diamagnetic. The diamagnetism opposes a magnetic field – in this case a very strong magnetic field – and the frog floats because of magnetic repulsion. Image by Lijnis Nelemans, High Field Magnet Laboratory, Radboud University Nijmegen.

Faraday discovered that all substances are diamagnetic – most are weakly so, some are strongly so.

Diamagnetism opposes the direction of an applied magnetic field.

For example, if you held the north pole of a magnet near a strongly diamagnetic substance, this substance would be pushed away by the magnet.

Diamagnetism in materials, induced by very strong modern magnets, can be used to produce levitation. Even living things, such as frogs, are diamagnetic – and can be levitated in a strong magnetic field.

Thomas Alva Edison was one of the greatest American inventors. He held a vast number of patents, the majority of them related to electricity and power. While two of his most famous inventions are the incandescent lamp and the phonograph, arguably his most significant invention was large-scale organized research.

Probably his most impressive invention, the phonograph, for the mechanical recording and reproduction of sound, was patented in 1877. The Edison Speaking Phonograph Company was established in early 1878, to exploit the new machine. Edison received $10,000 for the manufacturing and sales rights and 20% of the profits; by 1890 he held over 80 phonograph patents.

To explore incandescence, Edison and his associates, among them J. P. Morgan, founded the Edison Electric Light Company in 1878. Years later, the company became the General Electric Company. Edison invented the first practical incandescent lamp in 1879. After months of hard work researching metal filaments, Edison and his staff tested 6,000 organic fibers from around the world and determined that Japanese bamboo was ideal for mass production. Large-scale production of these cheap lamps turned out to be profitable. He later patented the first fluorescent lamp in 1896.

In 1882 Edison made an amazing scientific discovery termed the Edison Effect. He discovered that in a vacuum, electrons flowed from a heated element (such as an incandescent filament) to a cooler metal plate. The electrons flow only one way, from the hot element to the cool plate, like a diode. This effect is now called “thermionic emission.”

A method to transmit telegraphic “aerial” signals over short distances was patented by Edison in 1885. The “wireless” patent was later sold to Guglielmo Marconi.

Edison established the huge West Orange, N.J., factory in 1887 and supervised it until 1931. This was probably the world’s most cutting-edge research laboratory, and a forerunner to modern research and development laboratories, with experts systematically investigating and researching for the solution to problems.

The Edison battery, developed in 1910, used an alkaline electrolyte, and proved to be a superb storage device. He enhanced the copper oxide battery, strikingly similar to modern dry cells, in 1902.

He operated his first commercial electric power station in London in 1882, and America’s first electric station opened in New York City later that same year using a DC supply system.

The kinetograph, his motion picture camera, photographed action on 50-foot strips of film, and produced sixteen images per foot. Edison built a small movie production studio in 1893 called the “Black Maria,” which made the first Edison movies. The kinetoscope projector of 1893 displayed the films. The earliest commercial movie theater, a peepshow, was established in New York in 1894. After developing and modifying the projector of Thomas Armat in 1895, Edison commercialized it as the “Vitascope”.

The Edison Company created over 1,700 movies. Edison set the benchmark for talking pictures in 1904 by synchronizing movies with the phonograph. His cinemaphone adjusted the film speed to the phonograph speed. The kinetophone projected talking pictures in 1913. The phonograph, behind the screen, was synchronized by pulleys and ropes with the projector. Edison brought forth many “talkies.”

The universal motor, which utilized alternating or direct current, was devised in 1907. The electric safety lantern, patented in 1914, significantly reduced casualties among miners. The same year Edison devised the telescribe, which unified characteristics of the telephone and dictating phonograph.

Edison presided over the U.S. Naval Consulting Board throughout World War I and developed 45 inventions for the war effort. These inventions included substitutes for previously imported chemicals (such as carbolic acid), a ship-telephone system, an underwater searchlight and defensive instruments against U-boats. Later on, Edison launched the Naval Research Laboratory, the eminent American institution for organized weapons research.

Whenever we study or talk about radioactivity, the name Henri Becquerel at once enters our minds. He was the discoverer of radioactivity, for which he was awarded the 1903 Nobel Prize, sharing the award with Pierre and Marie Curie.

Radioactivity Studies:

Becquerel decided to investigate whether there was any connection between X-rays and naturally occurring phosphorescence. The glow of X-ray emission reminded Becquerel of the light he had studied for his doctorate, although he had not done much active research in the preceding few years. He had inherited from his father a supply of uranium salts, which phosphoresce when exposed to light. When the salts were placed near a photographic plate covered with opaque paper, the plate was found to be fogged.

The phenomenon was found to be common to all the uranium salts studied, and it was concluded to be a property of the uranium atom. Finally, Becquerel showed that the rays emitted by uranium caused gases to ionize and that they differed from X-rays in that they could be deflected by electric or magnetic fields. This was how the discovery of spontaneous radioactivity came about.

Nowadays it is generally considered that Becquerel discovered radioactivity by serendipity. It is also known that Becquerel discovered one type of radioactivity, beta particles, which are high-speed electrons leaving the nucleus of the atom. He demonstrated in 1899 that beta particles were the same as the recently identified electron.

From handling radioactive stones he noted that he developed recurring burns on his skin, which led ultimately to the use of radioactivity in medicine.

The unit of radioactivity, the becquerel (symbol: Bq) is named in his honor.

Other Studies:

Becquerel also authored detailed studies of the physical properties of cobalt, nickel, and ozone. He studied how crystals absorb light and researched the polarization of light.

Besides being a Nobel Laureate, Becquerel was elected a member of the Académie des Sciences de France and succeeded Berthelot as Life Secretary of that body. He was also a member of the Accademia dei Lincei and of the Royal Academy of Berlin, amongst others, and was made an Officer of the Legion of Honor. Becquerel published his findings in many papers, principally in the Annales de Physique et de Chimie and the Comptes Rendus de l’Académie des Sciences.

Marie Curie discovered two new chemical elements – radium and polonium. She carried out the first research into the treatment of tumors with radiation, and she founded the Curie Institutes, which are important medical research centers.

She is the only person who has ever won Nobel Prizes in both physics and chemistry.

Marie Curie’s Scientific Discoveries

The Ph.D. degree is a research based degree, and Marie Curie now began investigating the chemical element uranium.

Why Uranium?

In 1895, Wilhelm Roentgen had discovered mysterious X-rays, which could capture photographs of human bones beneath skin and muscle.

The following year, Henri Becquerel had discovered that rays emitted by uranium could pass through metal, but Becquerel’s rays were not X-rays.

Marie decided to investigate the rays from uranium – this was a new and very exciting field to work in. Discoveries came to her thick and fast. She discovered that:

- Uranium rays electrically charge the air they pass through. Such air can conduct electricity. Marie detected this using an electrometer Pierre and his brother invented.

- The number of rays coming from uranium depends only on the amount of uranium present – not the chemical form of the uranium. From this she theorized correctly that the rays came from within the uranium atoms and not from a chemical reaction.

- The uranium minerals pitchblende and torbernite have more of an effect on the conductivity of air than pure uranium does. She theorized correctly that these minerals must contain another chemical element, more active than uranium.

- The chemical element thorium emits rays in the same way as uranium. (Gerhard Carl Schmidt in Germany actually discovered this a few weeks before Marie Curie in 1898: she discovered it independently.)

By the summer of 1898, Marie’s husband Pierre had become as excited about her discoveries as Marie herself. He asked Marie if he could cooperate with her scientifically, and she welcomed him. By this time, they had a one-year-old daughter, Irene. Amazingly, 37 years later, Irene Curie herself would win the Nobel Prize in Chemistry.

“My husband and I were so closely united by our affection and our common work that we passed nearly all of our time together.”

Discovery of Polonium and Radium, and Coining a New Word

Marie and Pierre decided to hunt for the new element they suspected might be present in pitchblende. By the end of 1898, after laboriously processing tons of pitchblende, they announced the discovery of two new chemical elements which would soon take their place in Dmitri Mendeleev’s periodic table.

The first element they discovered was polonium, named by Marie to honor her homeland. They found polonium was 300 times more radioactive than uranium. They wrote:

“We thus believe that the substance that we have extracted from pitchblende contains a metal never known before, akin to bismuth in its analytic properties. If the existence of this new metal is confirmed, we suggest that it should be called polonium after the name of the country of origin of one of us.”

The second element the couple discovered was radium, which they named after the Latin word for ray. The Curies found radium is several million times more radioactive than uranium! They also found radium’s compounds are luminous and that radium is a source of heat, which it produces continuously without any chemical reaction taking place. Radium is always hotter than its surroundings.

Together they came up with a new word for the phenomenon they were observing: radioactivity. Radioactivity is produced by radioactive elements such as uranium, thorium, polonium and radium.

A Ph.D. and a Nobel Prize in Physics!

In June 1903, Marie Curie was awarded her Ph.D. by the Sorbonne.

Marie Curie in 1903 – her Nobel Prize photo.

Her examiners were of the view that she had made the greatest contribution to science ever found in a Ph.D. thesis.

Six months later, the newly qualified researcher was awarded the Nobel Prize in Physics!

She shared the prize with Pierre Curie and Henri Becquerel, the original discoverer of radioactivity.

The Nobel Committee were at first only going to give prizes to Pierre Curie and Henri Becquerel.

However, Pierre insisted that Marie must be honored.

So three people shared the prize for discoveries in the scientific field of radiation.

Marie Curie was the first woman to be awarded a Nobel Prize.

Marie Curie Theorizes Correctly About Radioactivity

“Consequently the atom of radium would be in a process of evolution, and we should be forced to abandon the theory of the invariability of atoms, which is at the foundation of modern chemistry.

Moreover, we have seen that radium acts as though it shot out into space a shower of projectiles, some of which have the dimensions of atoms, while others can only be very small fractions of atoms. If this image corresponds to a reality, it follows necessarily that the atom of radium breaks up into subatoms of different sizes, unless these projectiles come from the atoms of the surrounding gas, disintegrated by the action of radium; but this view would likewise lead us to believe that the stability of atoms is not absolute.”

Nobel Prize for Chemistry

In 1910, Marie isolated a pure sample of the metallic element radium for the first time. She had discovered the element 12 years earlier.

In 1911, she was awarded the Nobel Prize for Chemistry for the “discovery of the elements radium and polonium, the isolation of radium and the study of the nature and compounds of this remarkable element.”

Again, Marie Curie had broken the mold: she was the first person to win a Nobel Prize in both physics and chemistry. In fact, she is the only person ever to have done this.

The Coming of War – Helping the Wounded

During World War I (1914–1918), Marie Curie put her scientific knowledge to use. With the help of her daughter Irene, who was only 17 years old, she set up radiology medical units near battle lines to allow X-rays to be taken of wounded soldiers. By the end of the war, over one million injured soldiers had passed through her radiology units.

One of the Greats

Marie Curie was now recognized worldwide as one of science’s “greats.” She traveled widely to talk about science and promote The Radium Institute, which she founded to carry out medical research.

Marie was one of the small number of elite scientists invited to one of the most famous scientific conferences of all-time – the 1927 Solvay Conference on Electrons and Photons.

Marie Curie, aged 59, at the 1927 Solvay Conference on Electrons and Photons. This was an invitation-only meeting of the world’s greatest minds in chemistry and physics. In the front row are Max Planck, Marie Curie, Hendrik Lorentz and Albert Einstein. In the row behind are Martin Knudsen, Lawrence Bragg, Hendrik Kramers, Paul Dirac and Arthur Compton. All except Knudsen and Kramers were Nobel Prize winners.

Healing the World – The Radium Institute

Marie Curie became aware that the rays coming from radioactive elements could be used to treat tumors. She and Pierre decided not to patent the medical applications of radium, and so could not profit from it.

Max Planck changed physics and our understanding of the world forever when he discovered that hot objects do not radiate a smooth, continuous range of energies as had been assumed in classical physics. Instead, he found that the energies radiated by hot objects have distinct values, with all other values forbidden. This discovery was the beginning of quantum theory – an entirely new type of physics – which replaced classical physics for atomic scale events.

Quantum theory revolutionized our understanding of atomic and subatomic processes, just as Albert Einstein’s theories of relativity revolutionized our understanding of gravity, space, and time. Together these theories constitute the most spectacular breakthroughs of twentieth-century physics.

Max Planck’s Contributions to Science

Most theoretical physicists make their mark when they are young. Max Planck was 42 when he finally left an indelible mark on the world.

The problem he solved in 1900 was prompted by puzzlement over the electromagnetic spectrum emitted by hot objects.

Classical Physics Disagrees with Reality

When things get hot they radiate energy. For example, if you were to observe a blacksmith heating a horseshoe, you’d notice that when the shoe gets hot it glows a red color, and when it gets even hotter it glows white.

Hot metal glows, emitting electromagnetic radiation.

Physicists considered the case of a black body – a body which absorbs all electromagnetic radiation that falls on it. When it is heated, a black body radiates energy in the form of electromagnetic waves. These waves have a broad range of wavelengths such as visible, ultraviolet, and infrared light.

BUT, in the 1800s people noticed the colors of light radiated in experiments did not agree with those predicted by theory. In scientific language, there was a mismatch between the wavelengths radiated by hot objects and the wavelengths predicted by classical theories of thermodynamics.

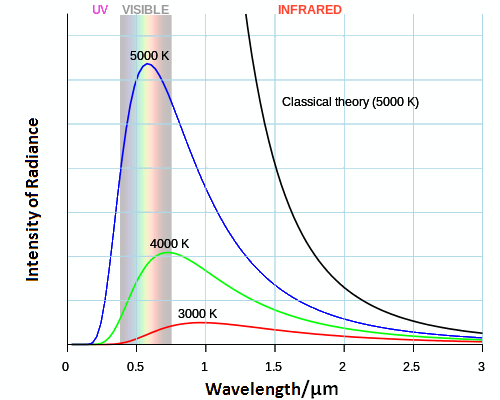

The graph below shows the problem. The black curve shows the predicted behavior of a black body at a temperature of 5000 K. The blue line shows the actual behavior.

Black-body Radiation Intensity vs Wavelength

Compare the curve expected from classical thermodynamic theory at a temperature of 5000 K (black line) versus that observed in experiments (blue line). They are very different! Also shown in green and red are curves at somewhat lower temperatures.

Quantum Theory

In order to match theory with observations Planck made a revolutionary proposal. If you’re not already familiar with quantum theory, to understand what he proposed, it might help to think about a times table – for example the three times table – 3, 6, 9, 12, 15… in which only numbers divisible by 3 are allowed and all other numbers are forbidden.

Planck’s idea was that energy is emitted in a similar manner. He proposed that only certain amounts of energy could be emitted – i.e. quanta. Classical physics held that all values of energy were possible.

This was the birth of quantum theory. Planck found that his new theory, based on quanta of energy, accurately predicted the wavelengths of light radiated by a black body.
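
The mismatch between classical theory and Planck's result can be reproduced numerically. The Python sketch below compares the classical Rayleigh-Jeans formula with Planck's radiation law at 5000 K, using the standard textbook forms of both. Neither formula is given in the article, and the rounded constants and function names are assumptions added for illustration.

```python
import math

# Rounded physical constants (assumed values, SI units).
PLANCK_CONSTANT = 6.626e-34      # J s
SPEED_OF_LIGHT = 2.998e8         # m/s
BOLTZMANN_CONSTANT = 1.381e-23   # J/K


def rayleigh_jeans(wavelength_m, temperature_k):
    """Classical prediction for black-body spectral radiance per unit wavelength."""
    return 2.0 * SPEED_OF_LIGHT * BOLTZMANN_CONSTANT * temperature_k / wavelength_m**4


def planck_law(wavelength_m, temperature_k):
    """Planck's quantum prediction for black-body spectral radiance per unit wavelength."""
    x = (PLANCK_CONSTANT * SPEED_OF_LIGHT
         / (wavelength_m * BOLTZMANN_CONSTANT * temperature_k))
    # math.expm1(x) computes exp(x) - 1 accurately, even for small x.
    return (2.0 * PLANCK_CONSTANT * SPEED_OF_LIGHT**2
            / (wavelength_m**5 * math.expm1(x)))


# Ratio of classical to quantum prediction at several wavelengths, at 5000 K.
for nanometers in (100, 500, 2000, 10000, 1000000):
    wavelength = nanometers * 1e-9
    ratio = rayleigh_jeans(wavelength, 5000.0) / planck_law(wavelength, 5000.0)
    print(f"{nanometers:>8} nm  classical / Planck = {ratio:.4g}")
```

At long wavelengths the ratio approaches 1, so the two theories agree. At short wavelengths the classical value grows enormously, which is the disagreement with experiment that Planck's quanta removed.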

Planck found the energy carried by electromagnetic radiation must come in whole-number multiples of a basic unit, the quantum, whose size is set by a number now called Planck’s constant, represented by the letter h. The energy of a single quantum could then be calculated from the equation:

E = hν

where E is energy, h is Planck’s constant, and ν is the frequency of the electromagnetic radiation. Planck’s constant is a very, very small quantity indeed. Its small size explains why the experimentalists of the time had not realized that electromagnetic energy is quantized. To four significant figures, Planck’s constant is 6.626 × 10⁻³⁴ J s.
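
The relation between energy and frequency, E = hν, can be tried out with a few lines of Python. The sketch below computes the energy of one quantum and lists the first few allowed energies, echoing the times-table picture. The function names, the rounded speed of light, and the choice of green light at 500 nm are assumptions made for illustration.

```python
PLANCK_CONSTANT = 6.626e-34   # J s, to four significant figures
SPEED_OF_LIGHT = 2.998e8      # m/s, rounded (an assumed value)


def quantum_energy(frequency_hz):
    """Energy in joules of a single quantum: E = h * frequency."""
    return PLANCK_CONSTANT * frequency_hz


def allowed_energies(frequency_hz, count):
    """First `count` allowed energies: 1, 2, 3, ... quanta.

    Like a times table, only whole-number multiples of one quantum appear.
    """
    one_quantum = quantum_energy(frequency_hz)
    return [n * one_quantum for n in range(1, count + 1)]


# Green light with a wavelength of 500 nm has a frequency of about 6e14 Hz.
green_frequency = SPEED_OF_LIGHT / 500e-9
print(quantum_energy(green_frequency))        # roughly 4e-19 J
print(allowed_energies(green_frequency, 4))
```

A single quantum of visible light carries only about 4 × 10⁻¹⁹ joules, which illustrates why the graininess of energy went unnoticed for so long.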

Planck had not intended to overthrow classical physics. His intention was to find a theory that matched experimental observations. Nevertheless, the implications of his discovery were momentous. Quantum theory – the realization that nature has ‘allowed’ and ‘forbidden’ states – had been born and the way we interpret nature would never be the same again.

Planck was awarded the 1918 Nobel Prize in Physics for:

“the services he rendered to the advancement of Physics by his discovery of energy quanta.”

Planck himself would later write:

“…it seemed so incompatible with the traditional view of the universe provided by Physics that it eventually destroyed the framework of this older view. For a time it seemed that a complete collapse of classical Physics was not beyond the bounds of possibility; gradually, however, it appeared, as had been confidently expected by all who believed in the steady advance of science, that the introduction of Quantum Theory led not to the destruction of Physics, but to a somewhat profound reconstruction…”

The Planck Scale

The Planck Scale was born in 1899. Planck proposed replacing the Earth-centered measurement system of:

- a kilogram – the mass of a liter of water

- a meter – one ten-millionth of the distance from the North Pole to the Equator

- a second – 1⁄86400 of an Earth day

with new, universal units based on:

- the speed of light

- the Planck constant

- the gravitational constant
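The three constants can be combined into a length, a mass, and a time. The Python sketch below shows one way to do the arithmetic. It rests on assumptions not stated in the text: it uses modern CODATA values for the constants, and it follows today’s convention of using the reduced Planck constant (h divided by 2π). The function names are our own.

```python
import math

# Constants in SI units (CODATA values).
SPEED_OF_LIGHT = 299_792_458.0        # m/s, exact by definition
PLANCK_CONSTANT = 6.62607015e-34      # J s, exact by definition
GRAVITATIONAL_CONSTANT = 6.67430e-11  # m^3 kg^-1 s^-2, measured

# Assumption: modern convention, which uses h / (2 * pi).
REDUCED_PLANCK = PLANCK_CONSTANT / (2 * math.pi)


def planck_length() -> float:
    """Planck length in meters: sqrt(hbar * G / c^3)."""
    return math.sqrt(REDUCED_PLANCK * GRAVITATIONAL_CONSTANT / SPEED_OF_LIGHT**3)


def planck_mass() -> float:
    """Planck mass in kilograms: sqrt(hbar * c / G)."""
    return math.sqrt(REDUCED_PLANCK * SPEED_OF_LIGHT / GRAVITATIONAL_CONSTANT)


def planck_time() -> float:
    """Planck time in seconds: sqrt(hbar * G / c^5)."""
    return math.sqrt(REDUCED_PLANCK * GRAVITATIONAL_CONSTANT / SPEED_OF_LIGHT**5)


if __name__ == "__main__":
    print(f"Planck length: {planck_length():.3e} m")
    print(f"Planck mass:   {planck_mass():.3e} kg")
    print(f"Planck time:   {planck_time():.3e} s")
```

With these inputs the length comes out near 1.6 × 10⁻³⁵ m, the mass near 2.2 × 10⁻⁸ kg, and the time near 5.4 × 10⁻⁴⁴ s. None of these values depends on the size or rotation of the Earth.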

In the following century Stephen Hawking discovered that the Planck scale really does measure something fundamental about nature, showing that the smallest possible black hole has a mass of 1 Planck mass unit, a Schwarzschild radius of 1 Planck length unit, and a half-life of 1 Planck time unit.

Moreover, Jacob Bekenstein found that when any black hole takes in a single elementary particle containing 1 bit of information, the area of the event horizon increases by about 1 square Planck length, revealing a remarkable link between the Planck scale and information.

“The laws of Physics have no consideration for the human senses; they depend on the facts, and not upon the obviousness of the facts.”

In a series of brilliant experiments Heinrich Hertz discovered radio waves and established that James Clerk Maxwell’s theory of electromagnetism is correct.

Hertz also discovered the photoelectric effect, providing one of the first clues to the existence of the quantum world. The unit of frequency, the hertz, is named in his honor.

He personally designed experiments which he thought would answer Helmholtz’s question. He began to really enjoy himself, writing home:

“I cannot tell you how much more satisfaction it gives me to gain knowledge for myself and for others directly from nature, rather than to be merely learning from others and myself alone.”

The Prize

In August 1879, aged 22, Hertz won the prize – a gold medal. In a series of highly sensitive experiments he demonstrated that if electric current has any mass at all, it must be incredibly small. We have to bear in mind that when Hertz carried out this work the electron – the carrier of electric current – had not even been discovered. J. J. Thomson’s discovery was made in 1897, 18 years after Hertz’s work.

The Discovery of Radio Waves

Well-Equipped Laboratories and Attacking the Greatest Problem

In March 1885, desperate to return to experimental physics, Hertz moved to the University of Karlsruhe. Aged 28, he had secured a full professorship. He was actually offered two other full professorships, a sign of his flourishing reputation. He chose Karlsruhe because it had the best laboratory facilities.

Wondering about which direction his research should take, his thoughts drifted to the prize work Helmholtz had failed to persuade him to do six years earlier: proving Maxwell’s theory by experiment.

Hertz decided that this mighty undertaking would be the focus of his research at Karlsruhe.

A Spark that Changed Everything

After some months of experimental trials, the apparently unbreakable walls that had frustrated all attempts to prove Maxwell’s theory began crumbling.

It started with a spark.

It started with a chance observation early in October 1886, when Hertz was showing students electric sparks. Hertz began thinking deeply about sparks and their effects in electric circuits. He began a series of experiments, generating sparks in different ways.

He discovered something amazing. Sparks produced a regular electrical vibration within the electric wires they jumped between. The vibration moved back and forth more often every second than anything Hertz had ever encountered before in his electrical work.

He knew the vibration was made up of rapidly accelerating and decelerating electric charges. If Maxwell’s theory were right, these charges would radiate electromagnetic waves which would pass through air just as light does.

Producing and Detecting Radio Waves

In November 1886 Hertz constructed the apparatus shown below.

The Oscillator. At the ends are two hollow zinc spheres of diameter 30 cm. The spheres are each connected to copper wires which run into the middle where there is a gap for sparks to jump between.

He applied high voltage a.c. electricity across the central spark-gap, creating sparks.

The sparks caused violent pulses of electric current within the copper wires. These pulses reverberated within the wires, surging back and forth at a rate of roughly 100 million per second.

As Maxwell had predicted, the oscillating electric charges produced electromagnetic waves – radio waves – which spread out through the air around the wires. Some of the waves reached a loop of copper wire 1.5 meters away, producing surges of electric current within it. These surges caused sparks to jump across a spark-gap in the loop.
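The oscillation rate fixes the wavelength of the waves, because wavelength equals wave speed divided by frequency. The Python sketch below works this out under two assumptions of ours: that "100 million per second" means a frequency of about 1 × 10⁸ hertz, and that the waves travel at the rounded speed of light, 3 × 10⁸ meters per second. The function name is hypothetical.

```python
SPEED_OF_LIGHT = 3.0e8  # m/s, rounded value


def wavelength_m(frequency_hz: float) -> float:
    """Wavelength in meters of an electromagnetic wave: speed / frequency."""
    return SPEED_OF_LIGHT / frequency_hz


if __name__ == "__main__":
    oscillator_hz = 1.0e8   # assumed reading of "100 million per second"
    loop_distance_m = 1.5   # distance to the receiving loop, from the text
    wavelength = wavelength_m(oscillator_hz)
    print(f"Wavelength: {wavelength:.1f} m")
    print(f"Loop distance in wavelengths: {loop_distance_m / wavelength:.2f}")
```

On these assumptions the waves were about 3 meters long, millions of times longer than the waves of visible light, and the receiving loop sat about half a wavelength from the oscillator.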

This was an experimental triumph. Hertz had produced and detected radio waves. He had passed electrical energy through the air from one device to another one located over a meter away. No connecting wires were needed.

Taking it Further

Over the next three years, in a series of brilliant experiments, Hertz fully verified Maxwell’s theory. He proved beyond doubt that his apparatus was producing electromagnetic waves, demonstrating that the energy radiating from his electrical oscillators could be reflected and refracted, and could produce interference patterns and standing waves, just like light.

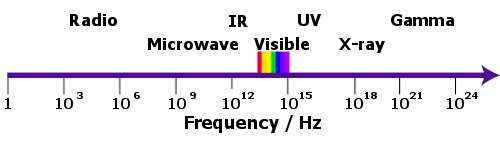

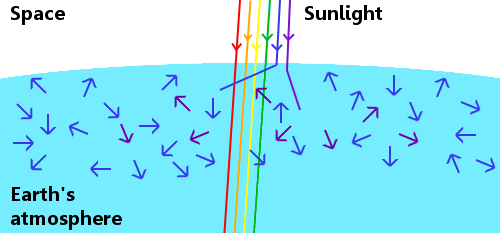

Hertz’s experiments proved that radio waves and light waves were part of the same family, which today we call the electromagnetic spectrum.

The electromagnetic spectrum. Hertz discovered the radio part of the spectrum.

Strangely, though, Hertz did not appreciate the monumental practical importance of the electromagnetic waves he had produced.

“I do not think that the wireless waves I have discovered will have any practical application.”

This was because Hertz was one of the purest of pure scientists. He was interested only in designing experiments to entice Nature to reveal its mysteries to him. Once he had achieved this, he would move on, leaving any practical applications for others to exploit.

The waves Hertz first generated in November 1886 quickly changed the world.

By 1896 Guglielmo Marconi had applied for a patent for wireless communications. By 1901 he had transmitted a wireless signal across the Atlantic Ocean from Britain to Canada.

Hertz’s discovery was the foundation stone for much of our modern communications technology. Radio, television, satellite communications, and mobile phones all rely on it. Even microwave ovens use electromagnetic waves: the waves penetrate the outer layers of the food, heating it quickly.

Our ability to detect radio waves has also transformed the science of astronomy. Radio astronomy has allowed us to ‘see’ features we can’t see in the visible part of the spectrum. And because lightning emits radio waves, we can even listen to lightning storms on Jupiter and Saturn.

Scientists and non-scientists alike owe a lot to Heinrich Hertz.

The Photoelectric Effect

In 1887, as part of his work on electromagnetism, Hertz reported a phenomenon that had enormous implications for the future of physics and our fundamental understanding of the universe. It came to be known as the photoelectric effect.

He shone ultraviolet light on electrically charged metal, observing that the UV light seemed to cause the metal to lose its charge faster than otherwise.

He wrote the work up, published it in Annalen der Physik, and left it for others to pursue. It would have been a fascinating phenomenon for Hertz himself to investigate, but he was too wound up in his Maxwell project at the time.

Experimenters rushed to investigate the new phenomenon Hertz had announced.

In 1899 J. J. Thomson, the electron’s discoverer, established that ultraviolet light actually ejected electrons from metal.

This led Albert Einstein to rethink the theory of light. In 1905 he correctly proposed that light came in distinct packets of energy, which he called quanta and which we now call photons. Photons of ultraviolet light carry enough energy to interact with electrons in metals, giving the electrons enough energy to escape from the metal.
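Einstein’s idea can be put into numbers: an electron escapes only if a single photon carries more energy than the metal demands for its release, a quantity physicists call the work function. The Python sketch below illustrates the arithmetic. The work function of 4.3 electronvolts is an assumed, approximate figure for zinc, and the wavelengths and function names are our own choices for illustration.

```python
PLANCK_CONSTANT = 6.62607015e-34  # J s
SPEED_OF_LIGHT = 299_792_458.0    # m/s
JOULES_PER_EV = 1.602176634e-19   # joules in one electronvolt


def photon_energy_ev(wavelength_m: float) -> float:
    """Photon energy E = h * c / wavelength, converted to electronvolts."""
    return PLANCK_CONSTANT * SPEED_OF_LIGHT / wavelength_m / JOULES_PER_EV


def max_kinetic_energy_ev(wavelength_m: float, work_function_ev: float):
    """Einstein's relation: maximum kinetic energy = photon energy - work function.

    Returns None when the photon has too little energy to free an electron.
    """
    surplus = photon_energy_ev(wavelength_m) - work_function_ev
    return surplus if surplus > 0 else None


if __name__ == "__main__":
    zinc_work_function_ev = 4.3  # assumed, approximate illustrative value
    for label, wavelength in [("ultraviolet, 250 nm", 250e-9),
                              ("green, 550 nm", 550e-9)]:
        energy = max_kinetic_energy_ev(wavelength, zinc_work_function_ev)
        if energy is None:
            print(f"{label}: no electrons ejected")
        else:
            print(f"{label}: electrons leave with up to {energy:.2f} eV")
```

With these figures the ultraviolet photon has energy to spare, while the green photon falls short however bright the light is. That dependence on color rather than brightness is what the older wave picture of light could not explain.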

Einstein’s explanation of the photoelectric effect was one of the key drivers in constructing an entirely new way of describing atomic-scale events – quantum physics. Einstein was awarded the 1921 Nobel Prize in Physics for explaining the effect Hertz had discovered 34 years earlier.

The photoelectric effect. Photons of UV light carry the correct amount of energy to eject electrons from a metal.

The German physicist Wilhelm Conrad Röntgen was the first person to systematically produce and detect electromagnetic radiation in a wavelength range today known as x-rays or Röntgen rays.

His discovery of x-rays was a great revolution in the fields of physics and medicine and electrified the general public. It also earned him the Rumford Medal of the Royal Society of London in 1896 and the first Nobel Prize in Physics in 1901. He is also known for his discoveries in mechanics, heat, and electricity.

Discovery of X-rays:

For decades, he had been studying the effects of electrical charge on the response and appearance of vacuum tubes. The science of electricity was still relatively new, and there remained much to understand. His set-ups used relatively simple components by today’s standards.

In 1895 he conducted a series of experiments in which he connected a type of vacuum tube (visualize a light bulb on steroids) called a Hittorf-Crookes tube to an early and very powerful induction coil known as a Ruhmkorff coil, similar to the ignition coil that sparks a car engine into life. He was trying to reproduce a fluorescent effect observed with another type of vacuum tube called a Lenard tube. The cathode inside the tube produced a stream of electrons, a well-known phenomenon called a cathode ray. To his surprise, the tube also produced fluorescence on a screen coated with a compound called barium platinocyanide, several feet away. This suggested to him that a hitherto unknown, and entirely invisible, effect was being produced. We know now that the cathode ray had excited atoms of the material it struck inside the tube to produce X-rays, which in turn excited the atoms of the barium (an element which fluoresces readily).

He also discovered that when his hand passed between the electrically charged vacuum tube and the barium platinocyanide coated screen, he could see the shadow of his bones on the screen. He reproduced this phenomenon with his wife’s hand, causing her horror.

After secretly confirming his findings, he published an article titled, “On A New Kind Of Rays” (Über eine neue Art von Strahlen) in 1896. This revelation and its nearly immediate application to all sorts of medical imaging earned him an honorary medical degree. He earned the Rumford Medal of the Royal Society of London in 1896 and his Nobel Prize was awarded in 1901.

Niels Bohr completely transformed our view of the atom and of the world. Realizing that classical physics fails catastrophically when things are atom-sized or smaller, he remodeled the atom so electrons occupied ‘allowed’ orbits around the nucleus while all other orbits were forbidden. In doing so he founded quantum mechanics.

Later, as a leading architect of the Copenhagen interpretation of quantum mechanics, he helped to reshape our understanding of how nature operates at the atomic scale.

Niels Bohr’s Contributions to Science

A New Way of Thinking about Atoms

Bohr secured lecturing work, and his theoretical physics research focused on understanding the electron’s place in the atom.

Rutherford’s work had revealed atoms were made up of a tiny dense positively charged nucleus. The great majority of an atom’s volume was empty space patrolled in some way by negatively charged electrons.

Bohr knew Rutherford’s picture of the atom disagreed with the laws of classical physics. These said that negatively charged electrons must radiate energy and be pulled into the positively charged nucleus. Even when he wrote his Ph.D. thesis, Bohr stated that it was impossible for classical physics to explain behavior at the atomic scale.

Now he looked to the new quantum physics of Max Planck and Albert Einstein for a solution to the apparently impossible behavior of electrons. In fact, he started on this track in Manchester in 1912.

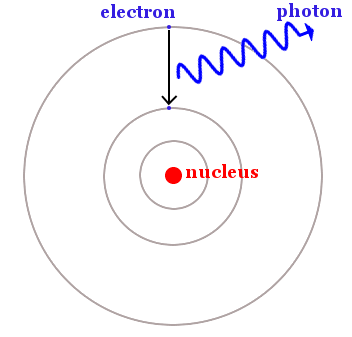

Quantum physics had established that when an object radiates heat or light waves, the emission comes not in a continuous stream, but rather in distinct packets of wave energy.

Einstein called these distinct packets quanta; today we call them photons. Like all waves, photons have a speed, a frequency, and a wavelength.

Planck deduced that the amount of energy carried by a photon could be found by multiplying just two numbers. These were the light’s frequency and a number we now call the Planck constant. His equation said E = hf, where E is energy, h is the Planck constant, and f is frequency.

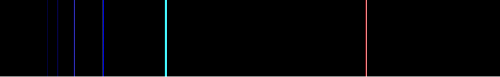

Clearly, light of a given frequency could only carry energy in whole-number multiples of one amount, hf, set by Planck’s constant. All other energies were forbidden. This was the essence of quantum theory – light was allowed to have certain amounts of energy, but was forbidden from having others.